Converging on a Path Forward

Developed a path tracer, had dinner with Mark Schoening and got a dead-link rug!

This past month I’ve felt a bit burned out after pushing myself beyond my technical comfort zone and facing weeks of hard challenges. I relish my independence, but it can feel daunting to face a challenge with no colleagues to bounce ideas off of or to get opinions from on a new approach. I suppose I should be thankful ChatGPT has been there to point me in directions I was unaware of, but suffice it to say I’ll be glad to move into next week and resume more creative tasks!

Raymarch Path Tracer

The big project consuming me lately has been developing a path tracer. I’ve used several commercial renderers in Maya / Blender and love how even throwing some simple geometry and a few area lights into a scene can make it feel amazing.

I had no idea how they worked beyond “they bounce rays around the scene.” I started my journey by going through the Ray Tracing in One Weekend series in C++. It took me a bit longer than a weekend… okay, it was about a week of full-time effort in total, but I came out the other end understanding roughly how it works!

What followed were several weeks of frustrating effort trying to adapt what I’d learned in C++, working on triangle geometry with all the memory pointers in the world, to a raymarched scene built entirely within a GLSL fragment shader. Reviewing similar examples on ShaderToy helped me get started, and then I made extensive use of ChatGPT to better understand many of the functions involved, covering topics such as probability density functions, geometric attenuation, Schlick’s approximation, etc.
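For anyone curious about that last term, here is a minimal C++ sketch of Schlick’s approximation. It is a standalone illustration rather than code from the path tracer itself, and the function names `schlickFresnel` and `f0FromIor` are hypothetical. The idea is to replace the full Fresnel equations with a cheap polynomial: F(θ) ≈ F0 + (1 − F0)(1 − cos θ)^5, where F0 is the reflectance at normal incidence and, for two dielectrics, F0 = ((n1 − n2) / (n1 + n2))^2.

```cpp
#include <cassert>
#include <cmath>

// Schlick's approximation of Fresnel reflectance:
//   F(theta) ~= F0 + (1 - F0) * (1 - cos(theta))^5
// f0 is the reflectance at normal incidence; cosTheta is the cosine of the
// angle between the incoming direction and the surface normal.
double schlickFresnel(double f0, double cosTheta) {
    // Clamp so floating-point noise in cosTheta can't push the base outside [0, 1].
    double m = std::fmin(std::fmax(1.0 - cosTheta, 0.0), 1.0);
    double m2 = m * m;
    return f0 + (1.0 - f0) * m2 * m2 * m;  // m^5 without calling std::pow
}

// Reflectance at normal incidence for an interface between two dielectrics
// with refractive indices n1 and n2, e.g. air (1.0) to glass (~1.5) gives 0.04.
double f0FromIor(double n1, double n2) {
    assert(n1 + n2 > 0.0);  // indices of refraction are positive
    double r = (n1 - n2) / (n1 + n2);
    return r * r;
}
```

With F0 = 0.04 for air to glass, a ray hitting the surface head-on reflects about 4% of the light, while at grazing angles the reflectance climbs toward 100%, which is what gives glossy spheres their bright rims.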

There are parts of the code I don’t yet fully understand, but I managed to implement a Disney-style BSDF shader, including a subsurface scattering method, which you can see where the light bleeds through the sphere on the middle right.

I hit so many problems getting to this image, such as random black pixels popping up due to divide-by-zero errors somewhere in the code. In the end, though, I got it working, and now I can render a relatively clean image in a few hours in the browser!
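One common way to guard against that class of bug is sketched below in C++, under my own assumptions rather than as the shader’s actual fix: refuse to divide by a zero or near-zero PDF, and drop any non-finite sample before it reaches the per-pixel average. A single NaN or infinity that slips into an accumulator spreads to the whole pixel, which typically shows up as a black (or sometimes white) speck. The names `weightSample`, `PixelAccumulator`, and the `1e-8` threshold are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Color { double r, g, b; };

// Hypothetical helper: weight a BSDF sample f by cosTheta / pdf.
// A zero, tiny, or NaN pdf returns black instead of dividing by (near) zero.
Color weightSample(Color f, double cosTheta, double pdf) {
    assert(!std::isnan(cosTheta));  // debug check: the cosine term itself should be valid
    const double kMinPdf = 1e-8;    // assumed threshold, tune per renderer
    if (!(pdf > kMinPdf)) return {0.0, 0.0, 0.0};  // the negated test also catches NaN
    double s = cosTheta / pdf;
    return {f.r * s, f.g * s, f.b * s};
}

bool isFinite(const Color& c) {
    return std::isfinite(c.r) && std::isfinite(c.g) && std::isfinite(c.b);
}

// Running average for one pixel that skips non-finite samples, so one bad
// sample cannot poison everything accumulated so far.
struct PixelAccumulator {
    Color sum{0.0, 0.0, 0.0};
    int count = 0;

    void add(const Color& c) {
        if (!isFinite(c)) return;  // discard NaN / infinity
        sum.r += c.r;
        sum.g += c.g;
        sum.b += c.b;
        ++count;
    }

    Color mean() const {
        if (count == 0) return {0.0, 0.0, 0.0};
        return {sum.r / count, sum.g / count, sum.b / count};
    }
};
```

Skipping bad samples introduces a small bias; counting them as zero instead is the other common choice, which slightly darkens the pixel rather than ignoring the sample. Either way, the better long-term fix is tracking down where the zero PDF comes from.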

So what am I going to do with it?

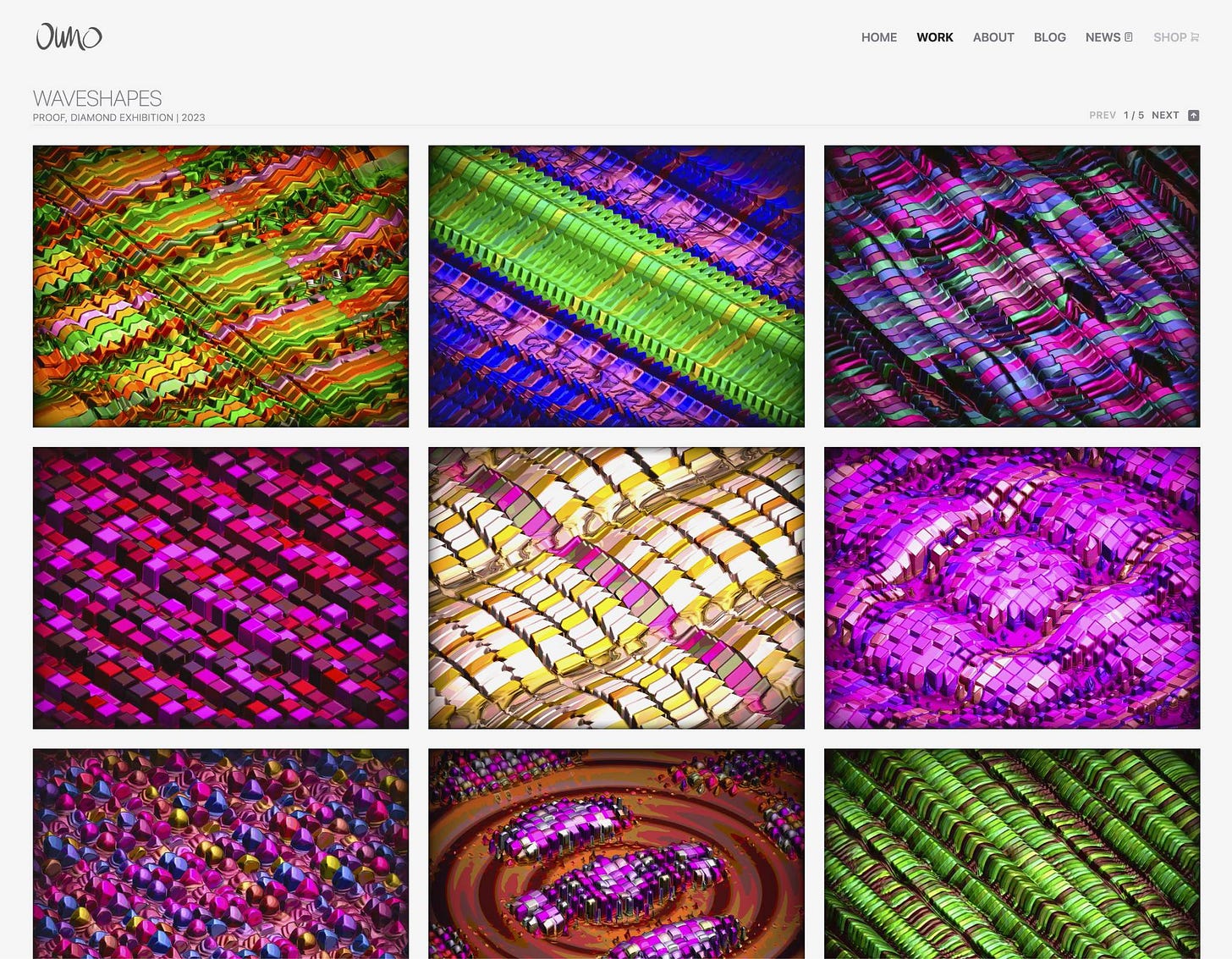

I personally love how my WaveShapes collection looks, but since it was designed for realtime, I could not crank up the quality to get the smooth look I originally intended. I was left wondering what it might look like if optimization were not a concern. I’ll be using my path tracer to adapt this project into something new, intended for a limited run of high quality prints. I already have a debug version working that looks quite promising, with the soft shadows and lens blur giving it lovely depth.

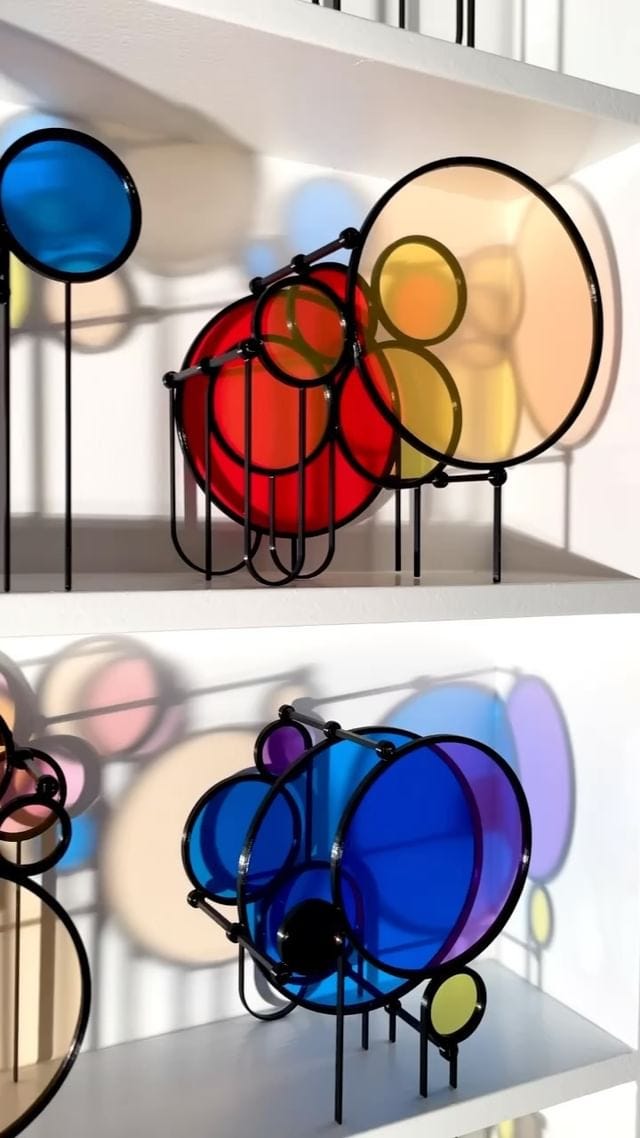

I also had the pleasure of meeting Mark Schoening for dinner while he was traveling to my city and we got to talking all things creative. We’ve been collaborating recently on a project called Cycles, which is exploring how to adapt a set of design ideas he’s envisioned into a generative system. As of right now, we’ve got a functional prototype that can generate a form and export printable STL files. He’s been building a shelf of sculptures generated directly from the code I’ve written.

A key quality of the project is how it uses colored lenses to cast mixed light onto the surfaces around it. Once we’ve settled on how we’d like to present the pieces in real life, I intend to adapt the path tracer to handle WebGL geometry so it can render high quality stills that simulate the look and feel you’d get from printing any given generation. Check out Mark’s video here:

We’re still actively exploring the system and iterating toward a balanced aesthetic that still leaves room for pleasant surprises. More on that later :)

Web Gallery

Art Blocks rugged me! Okay… that’s a bit inflammatory; I’m just poking a bit of fun at them. But I discovered one day that they had quietly de-listed a bunch of projects from their marketplace (formerly Sansa), which was frustrating because it left me with a dead link to what I thought would be a perpetually available AB Engine project.

I’ve always wanted my collections to have galleries on my website: full control of the presentation and no reliance on external parties. So I’ve rendered out full quality images, updated my studio API to query on-chain data and offer live generator URLs, and worked hard to make a simple, friendly gallery UX for most of my work.

Prints Soon™

Getting close to offering prints for sale! My poor Mac Mini has been generating high resolution artwork for weeks now, in preparation for offering “mystery packs” of my small-size, unlimited “out of bounds” prints. I’ve sent out some test proofs to family and friends to validate the packaging and materials, so I think I have it mostly ironed out.

I’ll likely do a soft launch of my shop soon (in a couple of weeks?), followed by an official announcement later when I have my first set of limited prints ready for collectors, like the proofs I now have on my wall.

By the way, if you really want to know when my shop goes live please reach out and I’ll email / message you privately when it’s up. That’s all for today.

Thank you for taking the time to read, and for your continued support!

Owen